You can remove almost every other facet of a VM, but it still has to "live" somewhere (even if, as some of you would point out, that somewhere is a live data stream). That much is clear to everyone; what does not come leaping out is what storage requirements the application footprint you want to support actually imposes.

The Basics of Analysis

The services you will support within the virtual environment will have many variables and tunables, but the keys to success come down to the following questions:

- What does the I/O profile look like?

- Will the deployment be single-site or multi-site?

- What does my backup solution need in order to ensure 100% coverage?

You will have to do your due diligence: list the applications you intend to run on the platform and analyze how each one uses storage. Some read and write in large sequential blocks (file shares, backup servers, deduplication, etc.), some read and write in highly random patterns (databases, HPC workloads, etc.), and some, such as email systems, have a good mix of both.

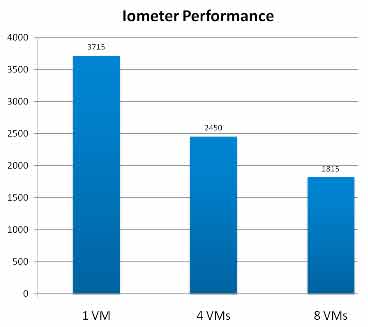
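You can put rough numbers on the sequential/random split. Take a trace of I/O requests and count how often each request starts exactly where the previous one ended. This Python sketch assumes you already have such a trace as (offset, length) pairs from whatever tracing tool you use. The function names and the 0.8/0.2 thresholds are hypothetical choices for illustration, not an industry standard.

```python
from typing import List, Tuple

# Hypothetical trace format: (offset_bytes, length_bytes), in the order issued.
Request = Tuple[int, int]


def sequential_ratio(requests: List[Request]) -> float:
    """Fraction of requests that begin exactly where the previous request ended."""
    prev_end = None
    sequential = 0
    transitions = 0
    for offset, length in requests:
        if prev_end is not None:
            transitions += 1
            if offset == prev_end:
                sequential += 1
        prev_end = offset + length
    return sequential / transitions if transitions else 0.0


def classify(requests: List[Request],
             seq_threshold: float = 0.8,
             rand_threshold: float = 0.2) -> str:
    """Label a trace; the thresholds are arbitrary illustrative cut-offs."""
    ratio = sequential_ratio(requests)
    if ratio >= seq_threshold:
        return "sequential"
    if ratio <= rand_threshold:
        return "random"
    return "mixed"


if __name__ == "__main__":
    backup_stream = [(0, 65536), (65536, 65536), (131072, 65536)]
    oltp_database = [(0, 8192), (1048576, 8192), (40960, 8192)]
    print(classify(backup_stream))   # sequential
    print(classify(oltp_database))   # random
```

A real analysis would also weigh request size and the read/write mix. Even this crude ratio is enough to tell a backup stream apart from a transactional database.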

You will tend to pay more for I/O performance, and if your purpose is to deploy servers you are looking at anywhere from 40 IOPS to thousands, depending on the application. A database with heavy reads and writes behaves very differently from a read-heavy web server. Running on a virtual machine mitigates some of the basics, but any time you need to access (read or write) data, I/O (input/output) comes into play.
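To turn per-application IOPS figures into a storage requirement, a common back-of-the-envelope method adds up reads and multiplies writes by the RAID write penalty. Typical rules of thumb are 2 for RAID 10, 4 for RAID 5 and 6 for RAID 6. The Python sketch below does exactly that. The workload figures and the 180 IOPS-per-disk value are hypothetical placeholders, and controller or array caching is ignored entirely.

```python
import math
from dataclasses import dataclass
from typing import List

# Commonly cited RAID write penalties: back-end disk I/Os per front-end write.
RAID_WRITE_PENALTY = {"raid10": 2, "raid5": 4, "raid6": 6}


@dataclass
class Workload:
    name: str
    iops: int       # peak front-end IOPS measured for the application
    read_pct: int   # percentage of those I/Os that are reads (0-100)


def backend_iops(workloads: List[Workload], raid: str) -> float:
    """Back-end IOPS the array must deliver: reads + writes * write penalty."""
    penalty = RAID_WRITE_PENALTY[raid]
    total = 0.0
    for w in workloads:
        reads = w.iops * w.read_pct / 100
        writes = w.iops * (100 - w.read_pct) / 100
        total += reads + writes * penalty
    return total


def disks_needed(workloads: List[Workload], raid: str, iops_per_disk: int) -> int:
    """Round up: a fractional disk still means buying a whole one."""
    return math.ceil(backend_iops(workloads, raid) / iops_per_disk)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    apps = [
        Workload("web", iops=200, read_pct=90),
        Workload("sql", iops=1500, read_pct=60),
    ]
    print(backend_iops(apps, "raid5"))        # 3560.0
    print(backend_iops(apps, "raid10"))       # 2320.0
    print(disks_needed(apps, "raid5", 180))   # 20
```

The write-heavy database dominates the result: it accounts for 3300 of the 3560 back-end IOPS under RAID 5. This is why heavy read/write databases and read-mostly web servers need to be sized separately.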

For an in-depth picture of the problem, take a look at http://lb.vg/DI272.

The bottom line: if your servers are sized appropriately and your storage sizing seems adequate but performance isn't what it should be, it may be time to break out esxtop, top or a similar tool and analyze CPU wait time to determine whether storage is the bottleneck. Dollars to doughnuts it is...
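On an ESX host, esxtop's disk views report latency counters such as DAVG, KAVG and GAVG, which are the more direct signal. Inside a Linux guest, the "wa" figure in top comes from the iowait column of /proc/stat. This sketch computes the iowait share between two samples of the aggregate "cpu" line. It is a rough indicator only, and the helper names are hypothetical.

```python
import time
from typing import List

# Field order of the aggregate "cpu" line in Linux /proc/stat:
# user nice system idle iowait irq softirq steal guest guest_nice
IOWAIT_INDEX = 4
ACCOUNTED_FIELDS = 8  # user..steal; guest time is already folded into user/nice


def parse_cpu_line(line: str) -> List[int]:
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    return [int(x) for x in fields[1:]]


def iowait_percent(before: str, after: str) -> float:
    """Share of CPU time spent waiting on I/O between two /proc/stat samples."""
    b = parse_cpu_line(before)[:ACCOUNTED_FIELDS]
    a = parse_cpu_line(after)[:ACCOUNTED_FIELDS]
    total = sum(a) - sum(b)
    if total <= 0:
        return 0.0
    return 100.0 * (a[IOWAIT_INDEX] - b[IOWAIT_INDEX]) / total


def sample_iowait(interval_s: float = 1.0) -> float:
    """Take two readings of /proc/stat, interval_s seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.readline()
    time.sleep(interval_s)
    with open("/proc/stat") as f:
        after = f.readline()
    return iowait_percent(before, after)
```

Calling `sample_iowait(5.0)` on a busy guest returns a percentage. A figure that stays high while the CPUs otherwise sit idle points toward storage rather than compute.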

Single or Multi-Site

In the dark ages of virtualization (about five years ago), when vMotion was in its infancy and untrusted and Storage vMotion had yet to make its debut, site replication options were very limited... and then the SAN companies magically got a clue and ushered in the era of ubiquitous data mirroring we all enjoy today... oh wait, I was inhaling whiteboard markers again. No, that never happened... ever.

Today your storage choices may be more numerous than before, but they still rely on the same largely logical layouts and presentation of disks from all the same players, with FCoE (and, begrudgingly, InfiniBand) as notable newcomers to the fray. Enter Cisco, a company I have much maligned in some roles and championed in others. Cisco has gotten a major clue and started the convergence movement, which promises great gains in the virtualization space as storage and data become parallel resources instead of disparate islands of consternation. But convergence is still not where it needs to be, and even Cisco's Nexus approach has run into convergence challenges. So the drums beat on, but where does that leave us...

Oh yeah... silos of data... the same as it has been since the invention of storage media. EMC and NetApp both offer reasonably affordable cross-site mirroring technologies (Replicator and SnapMirror, respectively). This kind of cross-site replication can make data accessible within seconds of a detected failure, which speeds site recovery. Otherwise, you can ship VMs and snapshots manually and recover databases from log backups, so there are options. Just consider your storage position carefully, lest ye pay the price later when you want to roll out SRM or a similar technology.

(Also worth noting is vCenter Heartbeat, which protects vCenter and its database server; it is a great cross-site technology.)

Backups / Disaster Recovery and the like

From the previous section on site models, it should be clear that data ends up all over the place in a typically sprawling multi-site deployment. It is crucial to understand how you intend to back up the VMs in your environment and what role your storage platform plays in that architecture. It is conceivable, though far less likely than it was a few years ago, that you have a tape system attached directly to one of your ESX servers. So what other options do people use?

- Network backup software, agent-based or agentless (NetBackup, TSM and the like)

- Storage-based backups (NetApp Snap technologies, as well as others from various storage vendors)

- Virtualization-based tools (such as VMware Data Recovery or Veeam Backup & Replication)
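Whichever of these options you choose, "100% coverage" can be checked mechanically. Compare the VM inventory against the VMs named in your backup jobs. This Python sketch assumes you can export both as plain lists of VM names. How you pull them from vCenter or your backup product is not shown, and the job and VM names are hypothetical.

```python
from typing import Dict, Iterable, Set


def covered_vms(jobs: Dict[str, Iterable[str]]) -> Set[str]:
    """Union of every VM named in any backup job."""
    covered: Set[str] = set()
    for members in jobs.values():
        covered.update(members)
    return covered


def uncovered_vms(inventory: Iterable[str], jobs: Dict[str, Iterable[str]]) -> Set[str]:
    """VMs in the inventory that no backup job protects."""
    return set(inventory) - covered_vms(jobs)


def stale_job_members(inventory: Iterable[str], jobs: Dict[str, Iterable[str]]) -> Set[str]:
    """VMs a job still references that no longer exist in the inventory."""
    return covered_vms(jobs) - set(inventory)


def coverage_percent(inventory: Iterable[str], jobs: Dict[str, Iterable[str]]) -> float:
    """Percentage of inventory VMs covered by at least one job."""
    inv = set(inventory)
    if not inv:
        return 100.0
    return 100.0 * (len(inv) - len(uncovered_vms(inv, jobs))) / len(inv)


if __name__ == "__main__":
    # Hypothetical inventory and job membership.
    inventory = ["web01", "web02", "db01", "fs01"]
    jobs = {
        "storage-snapshots": ["web01", "web02"],
        "sql-agent": ["db01", "db-old"],
    }
    print(uncovered_vms(inventory, jobs))      # {'fs01'}
    print(stale_job_members(inventory, jobs))  # {'db-old'}
    print(coverage_percent(inventory, jobs))   # 75.0
```

Reporting stale job members alongside uncovered VMs matters in a sprawling multi-site deployment. A job can look healthy while protecting a VM that has been renamed or migrated away.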

Conclusion

It is quite easy to develop a comprehensive plan cheaply using free or low-cost tools; however, the skills gap involved often tempts upper management to throw an application or technology at the problem instead. It is up to you as the architect to ascertain the true requirements, both business and technical, and to determine the vision for the virtualized infrastructure. Without a cohesive approach you will end up deploying virtualization as a series of projects. As one of my friends and peers, Steven Beaver of VMware Communities fame, puts it in his tag line: "Virtualization is a journey, NOT a project."

Good luck, and feel free to hit me back with any storage-related horror stories.

-Virt